Backpropagation is pretty cool, because it lets you adjust all the weights and biases according to the training data you have. But what about deeper networks, like Deep Recurrent Networks? There, the effect of a change in the cost function (C) on a weight in an initial layer, that is, the norm of the gradient, can become so small as the model grows more complex with more hidden units that it is effectively zero after a certain point. This is what we call Vanishing Gradients. The affected weights can no longer contribute to reducing the cost function (C) and stay essentially unchanged, which affects the network in the forward pass and eventually stalls the model. The Exploding Gradients problem, on the other hand, refers to a large increase in the norm of the gradient during training. Such events are caused by an explosion of the long-term components, which can grow exponentially more than the short-term ones.
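To see why depth matters, here is a minimal sketch, assuming a toy chain of scalar linear layers that all share one weight and have no activation function. By the chain rule, the gradient reaching the first layer is multiplied by that weight once per layer, so it shrinks or grows exponentially with depth. The function name `gradient_norm_through_layers` and the values 0.5 and 1.5 are illustrative assumptions, not part of any library or of the original article.

```python
def gradient_norm_through_layers(weight, depth):
    """Magnitude of a gradient backpropagated through `depth` scalar linear layers.

    Assumption: every layer computes y = weight * x, so its local
    derivative is simply `weight`.
    """
    grad = 1.0  # dC/d(output), set to 1 purely for illustration
    for _ in range(depth):
        grad *= weight  # chain rule: multiply by each layer's local derivative
    return abs(grad)


if __name__ == "__main__":
    for depth in (1, 10, 50):
        small = gradient_norm_through_layers(0.5, depth)  # |w| < 1: vanishes
        large = gradient_norm_through_layers(1.5, depth)  # |w| > 1: explodes
        print(f"depth={depth:2d}  w=0.5 -> {small:.3e}   w=1.5 -> {large:.3e}")
```

With a weight below 1 in magnitude the gradient collapses toward zero as depth grows, and with a weight above 1 it blows up. Real networks multiply by weight matrices and activation derivatives rather than a single scalar, but the same compounding effect applies.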

The Backpropagation algorithm is at the heart of most modern-day Machine Learning applications, and it's ingrained more deeply than you think. Backpropagation calculates the gradients of the cost function with respect to the weights and biases in the network. The gradient tells you about all the changes you need to make to your weights to minimize the cost function (it's actually -∇ that gives the steepest decrease; +∇ would give you the steepest increase in the cost function).
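The sign convention can be made concrete with a minimal sketch, assuming a toy one-parameter cost C(w) = (w - 3)^2 whose gradient is written by hand. The names `cost`, `grad`, `gradient_descent`, and `learning_rate` are illustrative assumptions; in a real network, backpropagation would supply the gradient instead.

```python
def cost(w):
    """Toy cost function C(w) = (w - 3)^2, minimized at w = 3."""
    return (w - 3.0) ** 2


def grad(w):
    """Hand-derived gradient dC/dw = 2 * (w - 3)."""
    return 2.0 * (w - 3.0)


def gradient_descent(w, learning_rate=0.1, steps=50):
    """Repeatedly step along -grad, the direction of steepest decrease."""
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


if __name__ == "__main__":
    w_start = 0.0
    w_final = gradient_descent(w_start)
    print(f"start: w={w_start}, C={cost(w_start):.4f}")
    print(f"end:   w={w_final:.4f}, C={cost(w_final):.6f}")
```

Flipping the update to `w + learning_rate * grad(w)` would move along +∇ and increase the cost instead.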

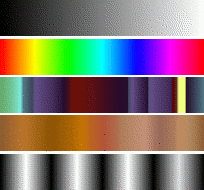

Vanishing and Exploding Gradients in Neural Network Models: Debugging, Monitoring, and Fixing Common problems with backpropagation
